How we switched to regular mobile app releases

inDriver recently started rolling out new mobile releases every week. Below, we describe how we switched to regular releases.

Some background information

In 2018, inDriver had four development teams: Android, iOS, Backend, and QA. Together they had about 30 employees, 10 of whom were mobile developers. At that time, new app releases were rolled out on an as-needed basis, mostly when big tasks were complete and ready.

There were several problems with this approach:

- It was unclear when the next release would come out. It was hard to predict when a new feature would ship, as large tasks could take anywhere from a few weeks to a few months.

- Small tasks waited a long time for release. A backlog of minor improvements and fixes that could already have benefited our users kept piling up.

- Releases took a long time to complete. Because so many tasks went into each release, many bugs were likely to surface, and investigating them took time. With such significant changes, it was harder to catch all the bugs before release, so debugging involved multiple hotfixes, which further delayed the full release. And that’s to say nothing of the tasks that “had to be included for sure; let’s push the release back a little, there’s only a bit of work left to do.”

- Development slowed down during release testing. For a few days, most of the testers’ effort went into checking the release, so no new features could be tested in the meantime, and they piled up in the “Ready for Test” status.

This situation continued until 2020. In late 2020, we grew our development staff, restructured development, and moved to cross-functional teams. Whereas previously a release only had to be coordinated within one team, now there were 20 teams to coordinate with. The mobile developers who committed to the shared repositories were now spread across different teams, and this imposed extra constraints on app releases: each team wanted to ship its own tasks according to its own plans and priorities.

Something had to change. We decided to set up a dedicated team to handle release management. That was how the release team came about.

Switching to regular releases

Once it was established, the release team’s first step was to switch to regular releases. The plan was as follows:

- Establish a release cycle.

- Set the day of the week on which to create a release branch.

- Set up a release schedule.

By this point, we had already made several attempts at the transition, and based on that experience we had a clear approach to managing releases. We knew that a two-week release cycle with alternating platforms would suit us. We had previously tried a three-week cycle, but we were not happy with the delivery speed, and the releases were fairly large, which made testing harder. So choosing the cycle length was quick.

We chose Friday as the day of the week on which to create a release branch. We had several reasons for this:

- It aligned with the end of the work week and the end of sprints.

- Cutting the release branch on a Friday spares the team from working overtime on weekends to keep the release on schedule.

- Release validation started on Monday, because at that time running the release verification routine took several days. We expected this to leave enough time to check the release within the week.
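The cycle length, the platform alternation, and the Friday cutoff together determine the whole schedule, so it can be generated rather than typed in by hand. Below is a minimal Python sketch, assuming that “alternating platforms” means Android and iOS take turns each week (so each platform gets a release branch every two weeks) and that validation starts on the Monday after the cutoff. The function names and the choice of which platform goes first are illustrative, not our actual tooling.

```python
from datetime import date, timedelta

# Assumption: the two platforms take turns each week, so each one gets a
# release branch every two weeks. Which platform goes first is arbitrary.
PLATFORMS = ("Android", "iOS")
FRIDAY = 4  # date.weekday(): Monday == 0 ... Sunday == 6


def next_friday(start: date) -> date:
    """Return `start` if it is a Friday, otherwise the following Friday."""
    return start + timedelta(days=(FRIDAY - start.weekday()) % 7)


def release_schedule(start: date, weeks: int) -> list[tuple[date, str, date]]:
    """Build (branch cutoff, platform, validation start) rows."""
    rows = []
    cutoff = next_friday(start)
    for week in range(weeks):
        platform = PLATFORMS[week % len(PLATFORMS)]
        # Validation begins on the Monday right after the Friday cutoff.
        validation_start = cutoff + timedelta(days=3)
        rows.append((cutoff, platform, validation_start))
        cutoff += timedelta(weeks=1)
    return rows


if __name__ == "__main__":
    for cutoff, platform, validation in release_schedule(date(2021, 1, 4), 4):
        print(f"{cutoff:%a %Y-%m-%d}  {platform:<8} validation from {validation:%a %Y-%m-%d}")
```

Starting from Monday, January 4, 2021, the first row is the Friday, January 8 cutoff for Android, with validation from Monday, January 11; iOS follows a week later.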

Once the release cycle and the cutoff day were defined, we set up a release schedule in Google Sheets:

Release Schedule

But that was not all. It took several months for the teams to get used to the new process. As it turned out, no one was prepared to wait two weeks for the next release, so many team members squeezed their own tasks into each release. As a result, many errors surfaced during release checks, which pushed back the timeline.

But there were some positive changes as well:

- Fewer tasks went into each release, and the tasks themselves became smaller.

- Product delivery accelerated: smaller tasks now steadily reached users.

- Teams could now plan their tasks around the release schedule.

Overall, the process became more transparent, and releases became regular and small. But this came with a large amount of routine work, and release testing was still lengthy. So the next issues to address were automating the process and speeding up release verification.

Automating the release process

The process of releasing an application consists of several stages:

- Preparing a release candidate.

- Acceptance testing.

- Uploading the release and submitting it to the store for review.

- Rolling out and monitoring the release.

- Rolling the release out to all users, if all goes well.
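The last two stages imply a staged rollout: the release first reaches a share of users and is expanded only while monitoring looks healthy. The Python sketch below shows such a gate. The rollout percentages and the crash-free threshold are hypothetical values chosen for illustration, and where the metric comes from (a crash reporter or the store consoles) is left out.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical rollout steps (percent of users) and quality bar.
ROLLOUT_STEPS = (1, 5, 20, 50, 100)
MIN_CRASH_FREE_RATE = 99.5  # percent of sessions without a crash


@dataclass
class RolloutState:
    percent: int            # share of users who currently get the release
    crash_free_rate: float  # observed crash-free sessions, in percent


def next_rollout_percent(state: RolloutState) -> Optional[int]:
    """Return the next rollout percentage, or None if the rollout should halt."""
    if state.crash_free_rate < MIN_CRASH_FREE_RATE:
        return None  # stop expanding and investigate first
    for step in ROLLOUT_STEPS:
        if step > state.percent:
            return step
    return 100  # already available to all users
```

Returning `None` rather than a smaller percentage keeps the decision to halt explicit; rolling back or preparing a hotfix stays a human call.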

Each stage involves a particular set of steps. For example, preparing the release candidate involves the following steps:

- Creating a release branch.

- Creating a task for the release.

- Creating a pull request.

- Building a release app.

- Bumping the app version.

- Creating a release changelog.

- Sending release notifications.
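These steps run in a fixed order, and each depends on the ones before it, so a natural shape for the automation is a pipeline that stops at the first failing step. The Python sketch below illustrates that idea. The step bodies are placeholders (real ones would call Git, Jira, the CI system, and a messenger), and both the branch naming scheme `release/<platform>/<version>` and the version number are hypothetical examples.

```python
from typing import Callable

# A step receives a shared context dict and may read from or add to it.
Step = Callable[[dict], None]


# Placeholder steps: real ones would call Git, Jira, CI, and a messenger.
def create_release_branch(ctx: dict) -> None:
    # Hypothetical naming scheme: release/<platform>/<version>
    ctx["branch"] = f"release/{ctx['platform'].lower()}/{ctx['version']}"


def create_release_task(ctx: dict) -> None:
    ctx["task"] = f"Release {ctx['platform']} {ctx['version']}"


def send_notifications(ctx: dict) -> None:
    ctx.setdefault("messages", []).append(f"{ctx['task']} is ready for testing")


def run_pipeline(steps: list[Step], ctx: dict) -> list[str]:
    """Run steps in order, stopping at the first one that raises.

    Returns the names of the steps that completed; on failure, the
    failing step and its error are recorded in ctx["error"].
    """
    completed = []
    for step in steps:
        try:
            step(ctx)
        except Exception as exc:
            ctx["error"] = f"{step.__name__}: {exc}"
            break
        completed.append(step.__name__)
    return completed


if __name__ == "__main__":
    context = {"platform": "Android", "version": "4.10.0"}
    done = run_pipeline([create_release_branch, create_release_task, send_notifications], context)
    print(done, context["branch"])
```

Recording which step failed makes a broken run easy to resume by hand instead of repeating steps that already succeeded.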

We decided to automate this routine work, as it was taking up too much time. To automate all of the above steps, we wrote many scripts, and we are now actively developing an internal release service. Here is our technology stack:

- GitHub Actions.

- Bash, Python, Go.

- Fastlane.

- Docker.
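Two of the steps above, bumping the version and building the changelog, are simple enough to sketch in Python, one of the languages in this stack. The sketch assumes a `MAJOR.MINOR.PATCH` version string and commit subjects that mention Jira-style task keys such as `RIDE-123`; the project keys are hypothetical, and the key pattern is deliberately simple.

```python
import re

# Jira-style issue keys such as "RIDE-123" (project keys are hypothetical).
# This simple pattern can also match strings like "UTF-8".
TASK_KEY = re.compile(r"\b[A-Z][A-Z0-9]+-\d+\b")


def bump_version(version: str, part: str = "minor") -> str:
    """Bump a MAJOR.MINOR.PATCH version string."""
    major, minor, patch = (int(x) for x in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part!r}")


def changelog_keys(commit_subjects: list[str]) -> list[str]:
    """Collect unique task keys from commit subjects, in first-seen order."""
    seen: dict[str, None] = {}  # a dict dedupes and keeps insertion order
    for subject in commit_subjects:
        for key in TASK_KEY.findall(subject):
            seen.setdefault(key, None)
    return list(seen)


def render_changelog(version: str, keys: list[str]) -> str:
    """Render a plain-text changelog: a title line, then one task per line."""
    return "\n".join([f"Release {version}"] + [f"- {key}" for key in keys])
```

In practice, the subjects could come from `git log --format=%s <previous-release-tag>..HEAD`, where the tag naming is up to the project.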

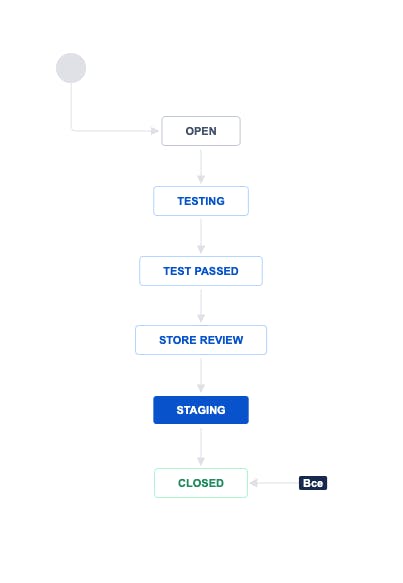

The first thing we did was to prepare a single source of release information. We created a new Release task type in Jira and modeled the release steps as a workflow:

Release Workflow

This gave us a clear view of which stage each release had reached. Previously, you had to check with the people preparing the release; now there was no need to ask anyone.
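A workflow like this is essentially a state machine: each release is in exactly one status, and only certain transitions are allowed. The Python sketch below models that idea. The status names follow the release stages listed earlier, and the allowed transitions (including going back to testing after a store rejection or a rollout problem) are assumptions for illustration; our actual Jira workflow may differ.

```python
from enum import Enum


class Status(Enum):
    PREPARING = "Preparing release candidate"
    TESTING = "Acceptance testing"
    STORE_REVIEW = "Store review"
    ROLLOUT = "Rolling out"
    RELEASED = "Released"


# Hypothetical transitions: mostly forward, with a way back to testing
# when a store rejection or a rollout problem requires a new build.
TRANSITIONS = {
    Status.PREPARING: {Status.TESTING},
    Status.TESTING: {Status.STORE_REVIEW},
    Status.STORE_REVIEW: {Status.ROLLOUT, Status.TESTING},
    Status.ROLLOUT: {Status.RELEASED, Status.TESTING},
    Status.RELEASED: set(),  # terminal status
}


def transition(current: Status, target: Status) -> Status:
    """Move a release to `target`, rejecting transitions the workflow forbids."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"cannot move from {current.value!r} to {target.value!r}")
    return target
```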

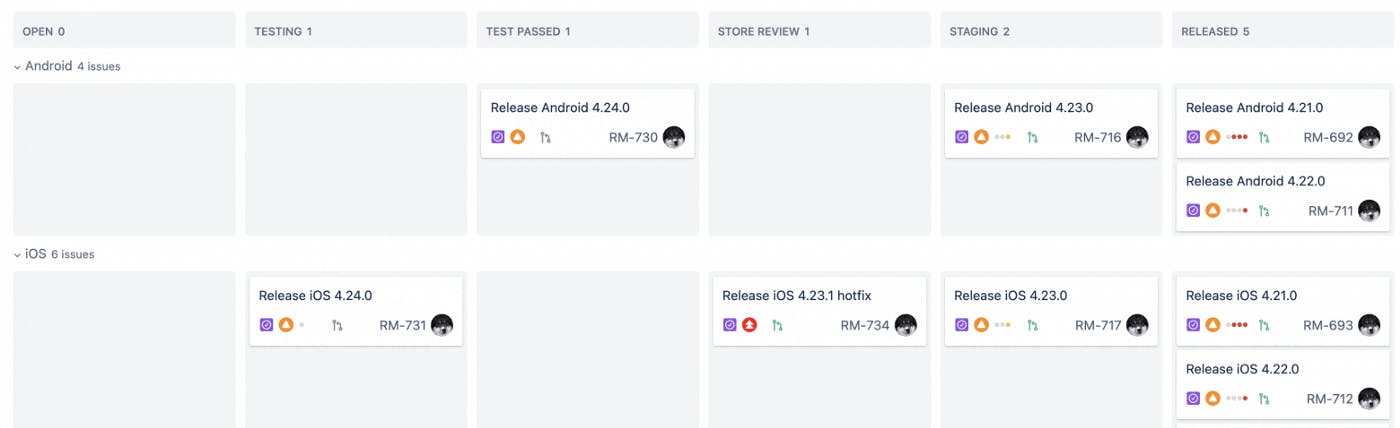

The release task gathers the necessary information: the list of included tasks, build numbers, and each team’s status as a separate subtask. During testing, these subtasks let us track the progress of individual teams.

Any bugs or errors found are linked to the release task. This lets us check the current status of the release: how many bugs are being worked on and which ones nobody has picked up yet.
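With team subtasks and linked bugs attached to one release task, the release status reduces to a small aggregation. The Python sketch below assumes the data has already been fetched from Jira into plain records; the status names (`Done`, `In Progress`, `Open`) and the readiness rule are hypothetical simplifications.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical status names; a real Jira project defines its own.
DONE, IN_PROGRESS, OPEN = "Done", "In Progress", "Open"


@dataclass
class TeamSubtask:
    team: str
    status: str


@dataclass
class Bug:
    key: str
    status: str


def release_summary(subtasks: list[TeamSubtask], bugs: list[Bug]) -> dict:
    """Summarize a release task: pending teams and linked bugs by status."""
    bug_counts = Counter(bug.status for bug in bugs)
    pending_teams = sorted(t.team for t in subtasks if t.status != DONE)
    return {
        "teams_pending": pending_teams,
        "bugs_in_progress": bug_counts[IN_PROGRESS],
        "bugs_not_started": bug_counts[OPEN],
        # Simplified rule: every team has signed off and no bug is still open.
        "ready": not pending_teams and bug_counts[IN_PROGRESS] + bug_counts[OPEN] == 0,
    }
```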

A separate dashboard tracks release statuses:

Dashboard

The release task is created on Fridays at midnight. The scripts that handle what used to be manual routine work run on a schedule in GitHub Actions.
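GitHub Actions evaluates `schedule` cron expressions in UTC, so a job meant to fire at local midnight needs its time shifted. The Python helper below computes the UTC cron line for a given local weekday and time, reading “Friday midnight” as Friday 00:00. The fixed UTC offset is an assumption (daylight saving time is ignored), and the helper is illustrative rather than part of our tooling.

```python
from datetime import datetime, timedelta, timezone

# 2024-01-07 was a Sunday; any week works as a reference point.
REFERENCE_SUNDAY = datetime(2024, 1, 7)


def utc_cron(weekday: int, hour: int, minute: int, utc_offset_hours: float) -> str:
    """Return a cron line for a weekly local time, expressed in UTC.

    `weekday` uses cron numbering (0 = Sunday ... 6 = Saturday). The offset
    is fixed, so daylight saving time changes are not handled.
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local = (REFERENCE_SUNDAY + timedelta(days=weekday)).replace(
        hour=hour, minute=minute, tzinfo=local_tz
    )
    utc = local.astimezone(timezone.utc)
    # Python's weekday() has Monday == 0; cron has Sunday == 0.
    cron_weekday = (utc.weekday() + 1) % 7
    return f"{utc.minute} {utc.hour} * * {cron_weekday}"
```

For example, with a UTC+6 offset, `utc_cron(5, 0, 0, 6)` gives `0 18 * * 4`: local Friday 00:00 is Thursday 18:00 UTC, and that string is what would go into the workflow’s `schedule` cron field.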

The bottom line:

- We have made the release process more transparent: anyone can now see the status of each release and the list of tasks it includes.

- The amount of routine time-intensive work is now significantly reduced.

- The release process for both platforms is now standardized.

Speeding up the release verification process

As I mentioned at the beginning, in 2018 our main testing staff had to be diverted to release verification. During that time no new features could be tested, which slowed down development during release testing. To solve the problem, we decided that only the release team would run the release checks.

We assigned four testers to the release team, taking this workload off the other teams. Besides checking releases, they streamlined the release process, wrote documentation, onboarded newcomers, and updated test cases in the TMS.

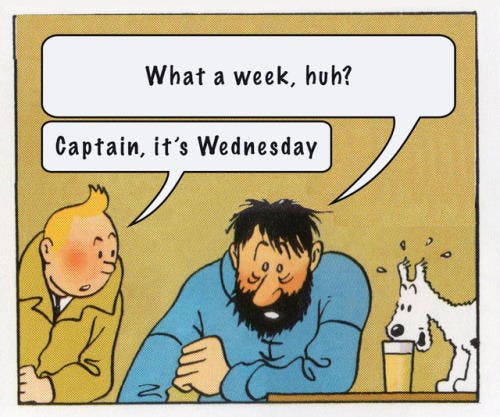

However, this approach revealed a number of downsides:

- Long release checks are exhausting.

- If someone on the team was unavailable, everyone else immediately faced a heavier workload.

Relatable

- Testers start missing bugs as their eyes get too used to the same screens.

- Device coverage is limited.

- You need in-depth knowledge of every feature to check the entire app quickly.

- You need to have well-written test cases to check unfamiliar functionality.

This went on for several months. Then, taking these shortcomings into account, we decided to involve the entire testing team in release checks.

Things went much better after that:

- The release verification process was accelerated significantly.

- Coverage on real devices increased.

- At the same time, all testers gained deeper knowledge of the product and its verticals, which makes it easier to stand in for one another or to get help in critical situations.
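Spreading the acceptance suite over the whole testing team is, at its core, a load-balancing problem: everyone should get a similar amount of work so the release check finishes as early as possible. One simple heuristic is the greedy longest-processing-time rule, sketched below in Python: take the longest cases first and hand each one to whoever currently has the least work. This is an illustration, not necessarily how we assign cases; the case names, tester names, and time estimates are made up.

```python
import heapq


def assign_cases(cases: dict[str, int], testers: list[str]) -> dict[str, list[str]]:
    """Split test cases (name -> estimated minutes) across testers.

    Greedy longest-processing-time rule: longest cases first, each given
    to the tester with the smallest total load so far.
    """
    # Heap of (current load in minutes, tester); ties break by tester name.
    heap = [(0, name) for name in testers]
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in testers}
    # Sort by duration descending, then by name for a deterministic result.
    for case, minutes in sorted(cases.items(), key=lambda kv: (-kv[1], kv[0])):
        load, name = heapq.heappop(heap)
        plan[name].append(case)
        heapq.heappush(heap, (load + minutes, name))
    return plan


if __name__ == "__main__":
    suite = {"login": 30, "ride_request": 60, "payment": 45, "chat": 15}
    print(assign_cases(suite, ["ann", "bob"]))
```

With these four hypothetical cases, both testers end up with 75 minutes of work. Because each tester checks on their own device, a wider split also tends to widen device coverage.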

It became clear that we now had to automate release verification to cut testing time. Together with the team of automation engineers responsible for building our testing tools, we began actively implementing UI tests to validate releases. For this, we use the following tools:

- Appium.

- Kotlin, JUnit 5.

- Docker, Selenoid.

- GitHub Actions.

UI tests currently cover about 30 percent of the test cases in the acceptance suite. To keep these tests green and catch bugs early, we run them every night.
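The coverage figure is the share of acceptance test cases that have an automated counterpart. A minimal Python sketch of that calculation, with a hypothetical `AcceptanceCase` record standing in for whatever the TMS exports, also yields the list of cases that still have to be checked manually:

```python
from dataclasses import dataclass


@dataclass
class AcceptanceCase:
    case_id: str
    automated: bool  # True if a UI test covers this case


def automation_coverage(cases: list[AcceptanceCase]) -> float:
    """Percentage of acceptance cases covered by automated UI tests."""
    if not cases:
        return 0.0
    return 100 * sum(case.automated for case in cases) / len(cases)


def manual_checklist(cases: list[AcceptanceCase]) -> list[str]:
    """IDs of the cases that testers still have to run by hand."""
    return [case.case_id for case in cases if not case.automated]
```

For instance, a suite of 10 cases with 3 automated gives 30.0, and the remaining 7 IDs make up the manual checklist for release day.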

To recap, switching to regular releases solved most of our problems:

- We set up a release schedule. Our teams can now plan their tasks, and the business can plan launches knowing the dates of upcoming releases in advance.

- Most of the routine, time-intensive work is automated. This lets us focus on other tasks, such as implementing UI tests.

- We made the release process more transparent: we can track the status of each release and see the information we need.

- Release validation now takes one day, Monday, and automation is rapidly shortening it further.

Even so, we realized that a two-week release cycle with alternating platforms still meant long delivery times for new features. Because teams were still unwilling to wait two weeks for the next release plus the time for a full rollout, they tried to squeeze as many extra tasks into each release as possible. Just before the release cutoff, a long queue of tasks waited to be merged, which put significant load on CI. On top of that, the alternating platforms left the teams’ sprints out of sync.

With this in mind, our goal for the next year was to move to weekly releases. To get there, we ran a one-week release experiment at the end of 2021 and gathered feedback from the team. The experiment revealed several problem areas that needed improvement, and some parts of the process had to be replaced entirely.